Projects

Throughout my career, I've developed robotic systems for deployment across the space, oil & gas, chemical, defense, nuclear, food service, and construction industries. My work has focused on advanced software and hardware solutions that work reliably in real-world environments where precision and dependability are critical. My expertise spans autonomous ground and aerial vehicle platform development, computer vision and deep learning algorithm development, mixed/augmented/virtual reality application development, and human-robot interaction research. Below are some of the key projects that showcase my work, each demonstrating how intelligent robotics can solve complex challenges in high-stakes, hazardous operational settings.

EgoNRG: Egocentric Navigation Robot Gesture Dataset

Developed a comprehensive gesture recognition dataset for human-robot interaction in industrial, military, and emergency response environments. Created 3,000 first-person multi-view video recordings and 160,000 annotated images using four head-mounted cameras to capture navigation gestures while operators wear Personal Protective Equipment (PPE). The dataset includes twelve navigation gestures (ten Army Field Manual-derived, one deictic, one emblem) performed by 32 participants across indoor/outdoor environments with varying clothing and background conditions. Provides joint hand-arm segmentation labels for both covered and uncovered limbs, addressing critical limitations in existing egocentric gesture recognition systems for real-world deployment in challenging operational environments.

Multi-Robot Optimal Path Planning Control Algorithm for Space Structure Assembly

New state space representations have been explored for multi-agent robotic systems, using A* and other algorithms to plan the steps robots take to build structures off a CubeSat. The aim is to reconceptualize the roles and objectives of individual ARMADAS robots to enhance coordinated team effectiveness through simplified robot designs that use COTS parts and have reduced motion complexity. Instead of walking around structures, 4-DoF robots will reside on the structure, using a bucket-brigade technique to transport voxels. For fastening, multiple 3-DoF robots will move along a specific axis inside the structure, shifting complexity to the planning algorithms.
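As a rough illustration of the search step only, here is a minimal A* sketch on a 2D occupancy grid. The grid, the unit move cost, and the function name `astar` are assumptions made for this example; the project's actual state space representation (robot roles, voxel placement order, structure constraints) is not described here and would be considerably richer.

```python
import heapq

def astar(grid, start, goal):
    """Shortest 4-connected path on a 2D grid; grid[r][c] == 1 marks a blocked cell."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (f = g + h, g, cell)
    came_from = {start: None}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was already expanded
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None  # goal unreachable
```

Calling `astar(grid, (0, 0), (2, 0))` returns the list of cells from start to goal, or `None` when no route exists.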

AR-Affordances: Obtaining Affordance Primitives from Single Demonstration to Define Manipulation Contact Tasks

This robot-agnostic Learning from Demonstration (LfD) technique lets users define a manipulation contact task for mobile manipulators in unstructured environments with a single demonstration. Using a model-based Affordance Primitive approach, mobile manipulators can navigate, plan, and execute a manipulation (e.g., turning a wheel valve) in any direction and at any angle, beyond the single demonstrated trajectory.

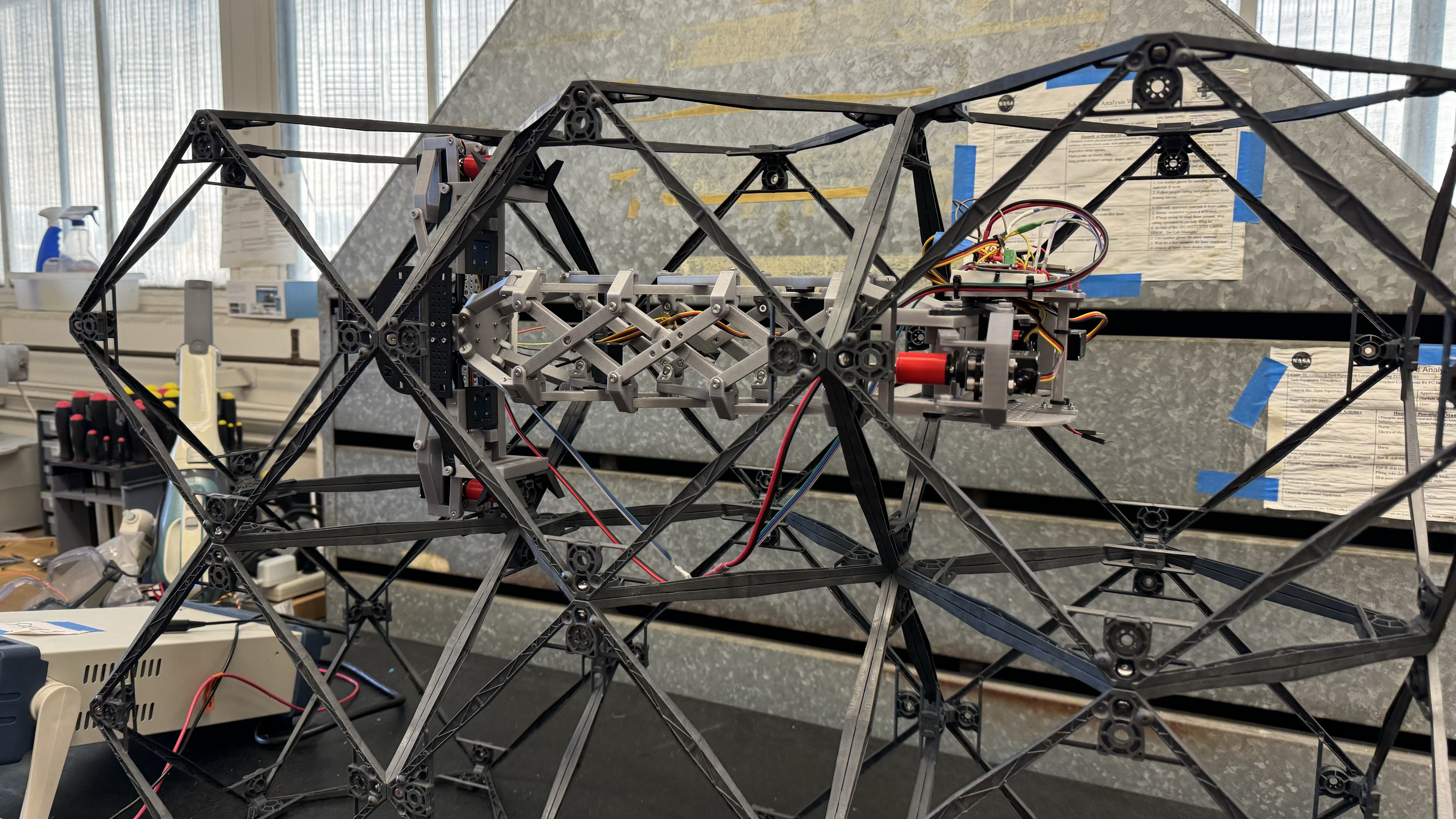

Hipless MMIC: Minimal Degree-Of-Freedom Voxel Bolting Robot for in-Space Structure Assembly

Developed a functioning prototype robot for NASA's Project ARMADAS as a simpler version of the original MMIC-I bolting robot. The goal was to cut the cost and complexity of the original space assembly robot while still performing the same tasks, which in turn reduces mission failure rates.

AR-STAR: An Augmented Reality Tool for Online Modification of Robot Point Cloud Data

During real-world robot deployments, sensor data often contains uncertainties that can compromise mission success, yet autonomous systems struggle to identify and resolve these issues on their own. To address this challenge, we created an augmented reality interface that enables human supervisors to view the robot's predictions in real-time and make corrections through three distinct interaction methods, allowing us to investigate which approaches users find most effective and intuitive.

Space Mission CubeSat ConOps Design for Assembly and Reconfiguration of Mechanical Metamaterials

NASA's ARMADAS project develops autonomous robots that build large-scale structures in space using modular lattice blocks, enabling construction of lunar bases, Martian shelters, and communication infrastructure. This work presents a scaled-down 27U CubeSat mission designed to demonstrate the system's autonomous assembly capabilities in orbit and advance its Technology Readiness Level for future deployment.

AugRE: HRI for Large Multi-Agent Teams

Command, control, and supervise heterogeneous teams of robots using an augmented reality headset. Scalable to 50+ autonomous agents, this AR-based Human-Robot Interaction (HRI) framework enables users to easily localize, supervise, and command a heterogeneous fleet of robots. This work has been published and won second place at the Horizons at Extended Robotics IROS 2023 Workshop!

Rapid Item Identification w/ Haptics

For UT Austin's ME 397 Haptics and Teleoperated Systems class with Dr. Ann Fey, my team and I developed a vibro-tactile haptic device that mounts to the HoloLens 2. The device delivered sequential vibrations to the user's head to help them locate robots and other items of interest in their environment faster.

Remote Teleoperation via AR-HMD

This project strives to develop new methods with augmented reality devices to allow operators to confidently and effortlessly control dual-arm remote mobile robotic manipulators.

Self Driving Car (Full SLAM Dev)

For UT Austin's CS 393R Autonomous Robots class with Dr. Joydeep Biswas, my teammate and I created a complete nav-stack for autonomous navigation of an Ackermann steering car. Localization was performed with a custom 2D-LiDAR particle filter, and a custom RRT algorithm was created for planning.
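To give a feel for the localization loop, here is a minimal particle filter sketch reduced to a 1D position estimate. It is not the class project code: the Gaussian motion and sensor models, the noise values, and the function name `particle_filter_step` are all assumptions for illustration, and a real 2D-LiDAR filter would score each particle against a map using full scans.

```python
import math
import random

def particle_filter_step(particles, control, measurement, rng,
                         motion_sigma=0.05, sensor_sigma=0.5):
    """One predict / weight / resample cycle for a 1D position estimate."""
    # Predict: apply the control input plus Gaussian motion noise.
    predicted = [p + control + rng.gauss(0.0, motion_sigma) for p in particles]
    # Weight: Gaussian likelihood of the measurement given each particle.
    weights = [math.exp(-0.5 * ((measurement - p) / sensor_sigma) ** 2)
               for p in predicted]
    total = sum(weights)
    if total == 0.0:
        return predicted  # every weight underflowed; skip resampling
    # Low-variance resampling: one random offset, then evenly spaced picks.
    n = len(predicted)
    step = total / n
    target = rng.uniform(0.0, step)
    resampled, cumulative, i = [], weights[0], 0
    for _ in range(n):
        while cumulative < target and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(predicted[i])
        target += step
    return resampled

# Hypothetical usage: a stationary robot at x = 5.0 with a noise-free sensor.
rng = random.Random(0)
particles = [rng.uniform(0.0, 10.0) for _ in range(500)]
for _ in range(5):
    particles = particle_filter_step(particles, control=0.0, measurement=5.0, rng=rng)
estimate = sum(particles) / len(particles)
```

After a few cycles the particle cloud collapses around the measured position, and `estimate` (the particle mean) serves as the pose estimate.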

Magnetic Inspection Robot (Full System Dev)

Tasked with creating an alternative method to inspect boiler tube walls for corrosion, eliminating the need for a human to enter a hazardous environment, I developed a modular, wirelessly controlled, 3D-printed magnetic inspection crawler from the ground up. Chain-driven by two DC motors, the crawler was able to crawl up and down the 120-ft-tall vertical boiler walls.

Motion Planning & Control

For UT Austin's ME 397 Algorithms for Sensor Based Robots class with Dr. Farshid Alambeigi, my teammate and I created algorithms to control a Kuka Quantec 6-DoF robotic manipulator. We used screw theory to model and control the arm, developing impedance control, admittance control, hand-to-eye calibration, virtual fixture, and point-cloud registration algorithms from scratch.

Quick Release Four-Bar Mechanism

The first prototype of this Quick Release Mechanism was designed to aid researchers in the computer science department at UT Austin attempting to perform the water bottle flip challenge with a robotic arm. My partner and I worked to develop a linear translation mechanism that can slowly close (clockwise drive) and rapidly open (counter-clockwise drive) via a DC motor.

Robotic Latency Compensation

For UT Austin's ME 397 Digital Controls class with Dr. Dongmei "Maggie" Chen, my team and I created a predictive digital controller to compensate for latency in a specific AR human-robot teaming application (AugRE). We applied control techniques including lead compensators, pole placement, linear quadratic regulators (LQR), and Smith predictors.
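The idea behind a Smith predictor can be sketched with a small simulation. Everything numeric below is assumed for illustration (a first-order plant `y[k+1] = a*y[k] + b*u[k-delay]`, a 5-step delay, and PI gains); it does not reflect the actual AugRE latency model or the controller from the class project. The controller is fed the output of a delay-free internal model, corrected by the mismatch between the real output and a delayed copy of that model.

```python
from collections import deque

def simulate_smith_predictor(steps=300, delay=5, a=0.9, b=0.1,
                             kp=2.0, ki=0.5, reference=1.0):
    """Simulate a PI loop with a Smith predictor; assumes delay >= 1."""
    y = 0.0      # real plant output (sees the delayed input)
    ym = 0.0     # internal model output without delay
    ymd = 0.0    # internal model output with delay
    integ = 0.0  # PI integrator state
    u_hist = deque([0.0] * delay, maxlen=delay)  # u_hist[0] is u[k - delay]
    outputs = []
    for _ in range(steps):
        # Feedback = delay-free prediction + (measured - delayed prediction).
        feedback = ym + (y - ymd)
        error = reference - feedback
        integ += error
        u = kp * error + ki * integ
        u_delayed = u_hist[0]
        u_hist.append(u)  # maxlen drops the oldest sample automatically
        # Advance the real plant and both internal models one step.
        y = a * y + b * u_delayed
        ymd = a * ymd + b * u_delayed
        ym = a * ym + b * u
        outputs.append(y)
    return outputs
```

With a perfect model, the mismatch term is zero, so the PI gains can be tuned as if the loop had no delay; the real output simply follows the same response shifted by `delay` steps.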

AR Scanning and Mapping

For my capstone at Drexel University, my team and I developed an AR tool to help construction workers easily map, scan, and superimpose building models on a spatially mapped environment. The DRACOS system integrated a HoloLens 1 AR device with a custom DJI F450 drone.

Teleoperation via Shadow Arm

For Drexel University's MEM 455 Robotics class with Dr. James Tangorra, I developed a scaled-down 5R robotic manipulator tasked with performing a simulated disaster recovery clean-up effort. To control the robot, I created a 3D-printed shadow arm that mapped potentiometer readings at each joint to physical joint positions of the real arm.
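The joint mapping can be illustrated with a simple linear conversion. The 10-bit ADC range, the joint limits of -90 to 90 degrees, and the function name `pot_to_joint_angle` are assumptions for this sketch, not the values used on the actual arm.

```python
def pot_to_joint_angle(adc_value, adc_min=0, adc_max=1023,
                       angle_min_deg=-90.0, angle_max_deg=90.0):
    """Linearly map a potentiometer ADC reading to a joint angle in degrees."""
    # Clamp so out-of-range readings cannot command past the joint limits.
    clamped = max(adc_min, min(adc_max, adc_value))
    fraction = (clamped - adc_min) / (adc_max - adc_min)
    return angle_min_deg + fraction * (angle_max_deg - angle_min_deg)

# Hypothetical readings from the five shadow-arm joints.
readings = [0, 256, 512, 768, 1023]
joint_targets = [pot_to_joint_angle(r) for r in readings]
```

In practice each joint would carry its own calibrated `adc_min`, `adc_max`, and angle limits rather than sharing one set of defaults.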